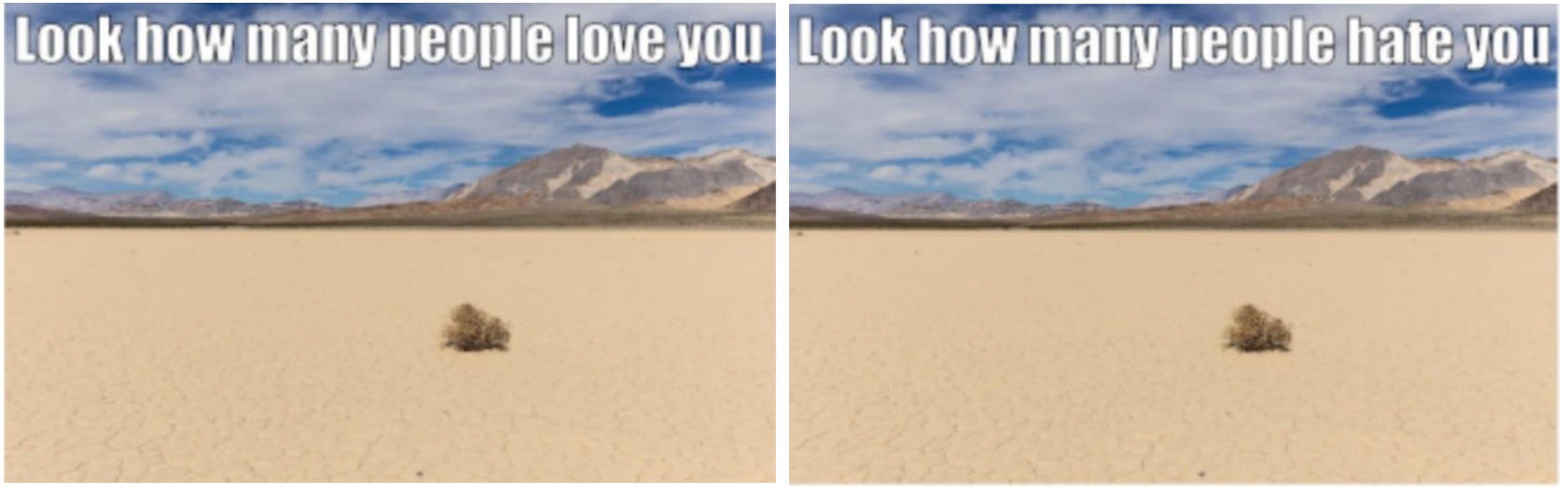

Hateful memes are captioned images promoting hostility towards specific social groups. Most hateful meme detection systems are logistic classifiers built on the embedding space of a pre-trained visual-language model (e.g., CLIP). However, we find that in these embedding spaces, hateful and benign memes are located in close proximity when they differ only in subtle but important details (e.g., Figure 1). This often results in misclassification. In our recent work, we introduce Retrieval-Guided Contrastive Learning (RGCL) to learn an embedding space that better separates hateful and benign memes. RGCL achieves state-of-the-art performance on the HatefulMemes and HarMeme datasets.

Fig 1. Examples of confounder memes in the HatefulMemes dataset.

\(

\require{amstext}

\require{amsmath}

\require{amssymb}

\require{amsfonts}

\)


Retrieval-Guided Contrastive Learning

We introduce the Retrieval-Guided Contrastive Loss (RGCL) to pull same-label samples closer together and push opposite-label samples farther apart in the embedding space. Computing the RGCL loss for each sample in the batch involves three types of examples:

- **Pseudo-gold positive example**: one same-label sample retrieved from the training set that has a high similarity score under the embedding space. This example pulls same-label memes with similar semantic meanings closer in the embedding space.

- **Hard negative examples:** opposite-label samples in the training set that have high similarity scores under the embedding space. These examples explicitly separate opposite-label samples that are hard to distinguish under the current embedding space.

- **In-batch negative examples**: opposite-label samples in the same batch, as commonly used in contrastive learning.

We obtain the embedding vectors for the pseudo-gold positive example and the hard negative examples as follows: \( \mathbf{g}_i^{+} = \underset{\mathbf{g}_j \in \mathbf{G} \setminus \{\mathbf{g}_i\}} {\operatorname{argmax}} \, \textrm{sim}(\mathbf{g}_i, \mathbf{g}_j)\cdot \mathbf{h}(y_i, y_j). \)

\( \mathbf{h}(y_i, y_j):= \begin{cases} 1&\text{if } y_j= y_i \newline -1& \text{if } y_j \not= y_i \end{cases}, \)

\( \mathbf{g}_i^{-} = \underset{\mathbf{g}_j \in \mathbf{G}}{\operatorname{argmax}} \, \textrm{sim}(\mathbf{g}_i, \mathbf{g}_j)\cdot (1-\mathbf{h}(y_i, y_j)), \) where $\mathbf{G}$ is the encoded vector retrieval database including all training examples.
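The retrieval step above can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' implementation: it assumes cosine similarity for $\textrm{sim}(\cdot,\cdot)$, a small in-memory database instead of a real vector index, and at least two examples per class. It also implements the stated intent through explicit label masks (most similar same-label item, most similar opposite-label item) rather than multiplying by $\mathbf{h}$ literally. The names `cosine_similarity_matrix` and `retrieve_examples` are hypothetical.

```python
import numpy as np

def cosine_similarity_matrix(queries, database):
    """Pairwise cosine similarity (assumed choice of sim)."""
    q = queries / np.linalg.norm(queries, axis=1, keepdims=True)
    d = database / np.linalg.norm(database, axis=1, keepdims=True)
    return q @ d.T

def retrieve_examples(G, labels):
    """For each row i of G, return the index of its pseudo-gold
    positive and the index of its hardest negative."""
    G = np.asarray(G, dtype=float)
    labels = np.asarray(labels)
    sim = cosine_similarity_matrix(G, G)
    same_label = labels[:, None] == labels[None, :]

    # Pseudo-gold positive: most similar same-label item, excluding i itself.
    pos_scores = np.where(same_label, sim, -np.inf)
    np.fill_diagonal(pos_scores, -np.inf)
    pos_idx = pos_scores.argmax(axis=1)

    # Hard negative: most similar opposite-label item.
    neg_scores = np.where(~same_label, sim, -np.inf)
    neg_idx = neg_scores.argmax(axis=1)
    return pos_idx, neg_idx

# Toy database: two "hateful" (1) and two "benign" (0) embeddings.
G = [[1.0, 0.0], [0.9, 0.1], [0.8, 0.6], [0.0, 1.0]]
labels = [1, 1, 0, 0]
pos_idx, neg_idx = retrieve_examples(G, labels)
```

In a real system the brute-force similarity matrix would be replaced by an approximate nearest-neighbour index, and the database embeddings would be refreshed as the encoder changes during training.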

We propose a novel Retrieval-Guided Contrastive Loss (RGCL) to supplement the conventional cross-entropy (CE) loss for logistic regression: \( \begin{align} \mathcal{L}_{i}^{RGCL} &= L(\mathbf{g}_{i},\mathbf{g}_{i}^{+},\mathbf{G}_{i}^{-}) \newline &= - \log \frac{ e^{\textrm{sim}(\mathbf{g}_{i},\mathbf{g}_{i}^{+})}}{ e^{\textrm{sim}(\mathbf{g}_{i},\mathbf{g}_{i}^{+})} + \sum_{\mathbf{g}\in\mathbf{G}_{i}^{-}} e^{\textrm{sim}(\mathbf{g}_i,\mathbf{g})}}. \end{align} \) Here $\mathbf{G}_{i}^{-}$ denotes the set of negative examples for sample $i$, i.e. its hard negative and in-batch negative examples.
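As a rough numerical illustration of the loss above, the following sketch evaluates it for a single anchor. It assumes cosine similarity for $\textrm{sim}(\cdot,\cdot)$ and takes the negatives as one array that would hold both hard negatives and in-batch negatives; the function name `rgcl_loss` is hypothetical and no temperature scaling is applied.

```python
import numpy as np

def cosine(a, b):
    """Cosine similarity between two vectors (assumed choice of sim)."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

def rgcl_loss(anchor, positive, negatives):
    """-log( e^{sim(g, g+)} / (e^{sim(g, g+)} + sum_n e^{sim(g, n)}) )."""
    pos_logit = cosine(anchor, positive)
    neg_logits = [cosine(anchor, n) for n in negatives]
    logits = np.array([pos_logit] + neg_logits)
    # Numerically stable log-sum-exp of all logits.
    m = logits.max()
    log_denominator = m + np.log(np.exp(logits - m).sum())
    return log_denominator - pos_logit

anchor = [1.0, 0.0]
positive = [1.0, 0.0]
easy = rgcl_loss(anchor, positive, [[0.0, 1.0]])   # negative is far away
hard = rgcl_loss(anchor, positive, [[1.0, 0.1]])   # negative is very close
```

The loss is larger when the negative lies close to the anchor, which is why retrieving hard negatives gives a stronger training signal than random negatives alone.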

To train the logistic classifier and the MLP within the VL Encoder as shown in the figure, we optimise the joint loss: \( \begin{align} \mathcal{L}_i &= \mathcal{L}_i^{RGCL} + \mathcal{L}_i^{CE}\nonumber \newline &= \mathcal{L}_i^{RGCL} - (y_i\log \hat{y}_i + (1-y_i)\log(1-\hat{y}_i)). \end{align} \)
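A small sketch of the joint objective for one sample, assuming the RGCL term has already been computed and that the cross-entropy term takes its standard negative log-likelihood form (so that a confident correct prediction yields a small, non-negative loss). The helper names and the clipping constant `eps` are illustrative assumptions.

```python
import math

def binary_cross_entropy(y, y_hat, eps=1e-12):
    """Standard binary CE: -(y log y_hat + (1 - y) log(1 - y_hat))."""
    y_hat = min(max(y_hat, eps), 1.0 - eps)  # avoid log(0)
    return -(y * math.log(y_hat) + (1 - y) * math.log(1.0 - y_hat))

def joint_loss(rgcl_term, y, y_hat):
    """Per-sample joint loss: RGCL term plus cross-entropy term."""
    return rgcl_term + binary_cross_entropy(y, y_hat)

# A hateful meme (y = 1) predicted with probability 0.9.
loss = joint_loss(rgcl_term=0.5, y=1, y_hat=0.9)
```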

Harnessing the embedding space: Retrieval-based KNN classifier

We show that RGCL indeed induces desirable structures in the embedding space by testing the performance of a K-Nearest-Neighbour (KNN) majority voting classifier. Note that KNN majority voting only performs well if the distance between two samples under the embedding space reflects how they differ in hatefulness, which is the learning goal of RGCL. In addition to demonstrating the effectiveness of RGCL, the KNN majority voting classifier also allows developers to update the hateful memes detection system by simply adding new examples to a retrieval vector database without retraining — a desirable feature for real services in the constantly evolving landscape of hateful memes on the Internet.

Here’s how the KNN majority voting classifier works. For a test meme $t$, we retrieve the $K$ memes closest to it in the embedding space from the retrieval vector database $\mathbf{G}$. We record the retrieved memes’ labels $y_k$ and their similarity scores $s_k=\text{sim}(g_k, g_t)$ with the test meme, where $g_t$ is the embedding vector of $t$.

We perform similarity-weighted majority voting to obtain the prediction:

\( \hat{y}'_t = \sigma\left(\sum_{k=1}^K\bar{y}_k \cdot s_k\right), \)

where $\sigma(\cdot)$ is the sigmoid function and

\( \bar{y}_k:= \begin{cases} 1 &\text{if } y_k= 1 \newline -1 &\text{if } y_k=0 \end{cases}. \)
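The similarity-weighted vote can be sketched as follows. This is an illustrative toy, assuming cosine similarity, a brute-force search over an in-memory database, and a 0.5 decision threshold on the sigmoid output; `knn_vote` is a hypothetical name, not part of any released code.

```python
import numpy as np

def knn_vote(g_t, G, labels, k=3):
    """Similarity-weighted KNN vote; returns sigma(sum_k y_bar_k * s_k)."""
    G = np.asarray(G, dtype=float)
    g_t = np.asarray(g_t, dtype=float)
    labels = np.asarray(labels)

    # Cosine similarity between the test embedding and every database entry.
    G_norm = G / np.linalg.norm(G, axis=1, keepdims=True)
    sims = G_norm @ (g_t / np.linalg.norm(g_t))

    # Indices of the k most similar database entries.
    top_k = np.argsort(-sims)[:k]

    # Map labels {1, 0} to votes {+1, -1} and weight them by similarity.
    y_bar = np.where(labels[top_k] == 1, 1.0, -1.0)
    score = float(np.sum(y_bar * sims[top_k]))
    return 1.0 / (1.0 + np.exp(-score))

G = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
labels = [1, 1, 0, 0]
p_hateful = knn_vote([1.0, 0.05], G, labels, k=3)  # neighbours are mostly label 1
p_benign = knn_vote([0.05, 1.0], G, labels, k=3)   # neighbours are mostly label 0
```

Updating the detector then amounts to appending new rows to `G` and `labels`; no parameters are retrained.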

Experiment and Results

We evaluate RGCL on the HatefulMemes and HarMeme datasets. Our system obtains an AUC of $86.7\%$ and an accuracy of $78.8\%$ on HatefulMemes, and an AUC of $91.8\%$ and an accuracy of $87.0\%$ on HarMeme. It outperforms prior state-of-the-art systems such as Flamingo and HateCLIPper by a large margin.

Conclusion

We introduced Retrieval-Guided Contrastive Learning to enhance any VL encoder in distinguishing confounding memes. Our approach uses a novel auxiliary-task loss with retrieved examples and significantly improves contextual understanding. Achieving an AUC score of $86.7\%$ on the HatefulMemes dataset, our system outperforms prior state-of-the-art models, including the 200 times larger Flamingo-80B. Our approach also achieves state-of-the-art results on the HarMeme dataset, emphasising its usefulness across diverse meme domains.